Mono from Stereo

- Thread starter Black Hole

- Start date

Ezra Pound

Well-Known Member

> OK, 60Hz sawtooth to both ears, same intensity. One signal is 30 degrees out of phase with the other. The direction the sounds appear to come from is pretty obvious to me, using headphones.

I think you have set up the wrong parameters for your sawtooth waveforms; they are both virtually square waves.

Here is a saw 30 :- http://ge.tt/4XfF3ho1/v/0

and a saw 330 :- http://ge.tt/5rjW5ho1/v/0
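For anyone who wants to rebuild the two files rather than download them, here is a minimal sketch of a 60Hz sawtooth pair with one channel lagging by 30 degrees. The function names, sample rate and output level are my own assumptions, not the settings used for the linked files. A 330 degree lag is the same as a 30 degree lead, so passing `offset_deg=330.0` swaps which ear is ahead.

```python
import math
import struct
import wave

def sawtooth(phase_cycles):
    """Rising sawtooth in [-1, 1); the argument is phase measured in cycles."""
    return 2.0 * (phase_cycles - math.floor(phase_cycles)) - 1.0

def stereo_saw(freq_hz=60.0, offset_deg=30.0, seconds=1.0, rate=44100):
    """Return (left, right) sample lists; the right channel lags by offset_deg."""
    n = int(seconds * rate)
    shift = offset_deg / 360.0  # lag expressed as a fraction of one cycle
    left = [sawtooth(freq_hz * i / rate) for i in range(n)]
    right = [sawtooth(freq_hz * i / rate - shift) for i in range(n)]
    return left, right

def write_stereo_wav(path, left, right, rate=44100, level=0.5):
    """Write 16-bit stereo PCM; level < 1 leaves headroom (assumed value)."""
    with wave.open(path, "wb") as w:
        w.setnchannels(2)
        w.setsampwidth(2)
        w.setframerate(rate)
        scale = level * 32767
        w.writeframes(b"".join(
            struct.pack("<hh", int(scale * l), int(scale * r))
            for l, r in zip(left, right)))

left, right = stereo_saw()

# 30 degrees of a 60Hz cycle expressed as a time delay, in milliseconds.
delay_ms = 1000.0 * (30.0 / 360.0) / 60.0
```

Calling `write_stereo_wav("saw30.wav", left, right)` (the file name is only an example) produces a file to listen to on headphones. At 60Hz a 30 degree offset works out to roughly 1.4ms between the ears.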

D

Deleted member 473

Thanks, corrected! I wondered why my saw was feeling blunt!

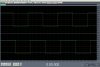

Anyway, the square wave exhibited the effect, but I attach the sawtooth here, generated using the free program WaveShop.

So, we do get directional information from phase differences at low frequencies, but that is well known anyway. It is the reason why you cannot stick your subwoofer anywhere in the room and expect the sound to be correct.

Try the same at 600Hz and it is impossible to localize the sound.

Doesn't some mono to false stereo conversion rely on phase changes? Also, I may have imagined it, but don't sound bars work on the same principle? (I am sure BH will jump on this if I am wrong!)

Ezra Pound

Well-Known Member

Black Hole

May contain traces of nut

> (I am sure BH will jump on this if I am wrong!)

Why? I don't have any specific knowledge of what's right or wrong; I'm trying to pin it down through proper scientific investigation.

> The sawtooth has a unique Fourier transform. If you start adding phase differences to the terms in the Fourier series, you won't in general get a sawtooth any more, and it will sound different. Hence, the ear is detecting phase differences between the frequencies it extracts.

How do you come to this assertion? I know the waveform will no longer look like a sawtooth, but will the ear perceive it any differently?

Trev

The Dumb One

Depends on how much you change it from a pure sawtooth. Obviously you can hear the difference between a sine wave, sawtooth and square wave of the same frequency, so you should be able to detect the difference in sound between different shaped sawtooth waveforms, but I suspect it will be only if they are 'quite different'.

Black Hole

May contain traces of nut

I think you are missing my point. The difference between sine, square, saw etc is in the contribution the harmonics make. Different amounts of the various harmonics, resulting in a different sound quality to each wave shape. My question is whether changing the relative phase of the harmonics without changing their power will be audible (even though the overall waveform will look different on a 'scope display).

An easy test will be to generate two sawtooth waves (not triangle, which is symmetrical) at the same frequency and amplitude, one with a sharp leading edge and the other with a sharp trailing edge. By making one essentially a time-reversed version of the other, the amplitudes of the harmonics will be the same but the phases will not. I'll do this later.
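The claim that a time-reversed sawtooth keeps its harmonic amplitudes but not its phases can be checked numerically before listening to anything. The sketch below is only an illustration under my own assumptions (one period of 1024 samples, a hand-rolled DFT, the first five harmonics); it is not the procedure used for any file in this thread.

```python
import cmath
import math

N = 1024  # samples in exactly one period (assumed)

# Slow rise, sharp fall at the end of the period: a sharp trailing edge.
rising = [2.0 * k / N - 1.0 for k in range(N)]
# Time-reversed copy: sharp jump at the start of the period, then a slow fall.
falling = rising[::-1]

def harmonic(x, h):
    """Complex DFT coefficient of harmonic h for one period of samples x."""
    n = len(x)
    return sum(v * cmath.exp(-2j * math.pi * h * k / n)
               for k, v in enumerate(x)) / n

results = [(h, harmonic(rising, h), harmonic(falling, h)) for h in range(1, 6)]

for h, a, b in results:
    print("harmonic", h,
          "| amplitudes", round(abs(a), 4), round(abs(b), 4),
          "| phases (deg)", round(math.degrees(cmath.phase(a)), 1),
          round(math.degrees(cmath.phase(b)), 1))
```

Each harmonic comes out with the same amplitude in both waves while the phase moves by half a cycle, so any audible difference between the two would have to come from phase alone.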

Black Hole

May contain traces of nut

OK - I'm not saying what I hear in these tests (I'm not sure how good my hearing is), but see what you make of them:

First test file (because it was relatively simple) is a 20-sec blast of alternating 2-sec tones: one tone is the fundamental (440Hz) plus the third and fifth harmonic contributions that would build a square wave if continued to infinity; the other tone has the same harmonics at the same power, but the third harmonic is phase shifted 180 deg so the waveform looks more like an approximation to a triangle. If you can't honestly tell them apart, then the conclusion is that, at least within the parameters of this test, the human ear-brain combination is not sensitive to relative phases of harmonics.

https://dl.dropbox.com/s/wrvzjyhfwqu8kla/Test1.zip
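A rough sketch of how the two Test 1 tones could be synthesised, in case the download is unavailable. The 1/3 and 1/5 harmonic weights are the standard square-wave Fourier coefficients; the sample rate and the function names are my assumptions, and the actual file may have been built differently.

```python
import math

RATE = 44100  # samples per second (assumed)
F0 = 440.0    # fundamental, as described in the post

def tone(third_sign, seconds=2.0):
    """Fundamental + 3rd/3 + 5th/5; third_sign=-1 shifts the 3rd harmonic by 180 deg."""
    n = int(seconds * RATE)
    out = []
    for i in range(n):
        w = 2.0 * math.pi * F0 * i / RATE
        out.append(math.sin(w)
                   + third_sign * math.sin(3.0 * w) / 3.0
                   + math.sin(5.0 * w) / 5.0)
    return out

def rms(x):
    """Root-mean-square level, a stand-in for signal power."""
    return math.sqrt(sum(v * v for v in x) / len(x))

square_like = tone(+1)    # start of a square-wave series
triangle_like = tone(-1)  # same harmonic amplitudes, 3rd harmonic inverted

print("rms :", rms(square_like), rms(triangle_like))
print("peak:", max(square_like), max(triangle_like))
```

The two tones have the same RMS level but visibly different peak values, which is the point of the test: identical harmonic power, different waveform shape. Alternating them five times each gives the 20-second sequence.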

Second test file: tests 2a, 2b, 2c contained within one zip. Test2a.wav is three bursts of 440Hz tone (as per above) where the left and right channels are in phase and the only variation is in the envelope defining the amplitude of the burst over time. The first burst delays the envelope on the right channel compared with the left by 0.3ms (equivalent to a path difference of about 4 inches), second burst is in step, and the third burst delays the left envelope by 0.3ms.

Test2b.wav uses the same tones, but keeps the envelopes in step and varies the phase of the tones within the envelopes: in the first burst the phase is delayed by 0.3ms in the right channel, then zero delay, then 0.3ms in the left channel.

Test2c.wav keeps the phases and the envelopes in step, and only varies the relative amplitudes by +0.5dB, 0dB, -0.5dB.

https://dl.dropbox.com/s/r1jg8sg5jxguh6t/Test2.zip
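The three cues in Test 2 can be separated in code as well. The sketch below is an assumption-laden illustration: the burst length, the raised-cosine envelope shape, the 343 m/s speed of sound and all the names are mine, not taken from the files. It builds a reference left channel and three right-channel variants, each changing exactly one cue, and checks the path-difference arithmetic.

```python
import math

RATE = 44100            # samples per second (assumed)
F0 = 440.0              # tone frequency from the post
DELAY_S = 0.0003        # 0.3ms, from the post
SPEED_OF_SOUND = 343.0  # m/s, assumed (air at about 20 C)

def envelope(t, length=0.5, ramp=0.05):
    """Raised-cosine fade in and out; zero outside (0, length)."""
    if t <= 0.0 or t >= length:
        return 0.0
    edge = min(t, length - t)
    if edge >= ramp:
        return 1.0
    return 0.5 * (1.0 - math.cos(math.pi * edge / ramp))

def burst(env_delay=0.0, phase_delay=0.0, gain_db=0.0, length=0.5):
    """One channel of a tone burst with an optional delay on envelope or carrier."""
    gain = 10.0 ** (gain_db / 20.0)
    n = int((length + 0.01) * RATE)  # small tail so a delayed envelope still fits
    return [gain
            * envelope(i / RATE - env_delay, length)
            * math.sin(2.0 * math.pi * F0 * (i / RATE - phase_delay))
            for i in range(n)]

left = burst()                        # reference channel
right_2a = burst(env_delay=DELAY_S)   # envelope delayed, carrier in phase
right_2b = burst(phase_delay=DELAY_S) # carrier delayed, envelope in step
right_2c = burst(gain_db=-0.5)        # level difference only

path_inches = DELAY_S * SPEED_OF_SOUND / 0.0254  # delay as a path difference
phase_deg = 360.0 * F0 * DELAY_S                 # delay as carrier phase at 440Hz

print(round(path_inches, 2), "inches;", round(phase_deg, 2), "degrees at 440Hz")
```

Under these assumptions 0.3ms corresponds to a path difference of just over 4 inches, matching the figure quoted above, and to a little under 48 degrees of phase at 440Hz.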

If you perceive the stereo image to pan from left to right in any or all of these samples, it demonstrates that only one piece of information is necessary to fool the ear-brain combo into creating the image. Obviously, a real source would have a combination of all three - the envelope and the phase would reach the ears at different times, and the relative amplitudes would be different too.

Only binaural recordings (intended for listening through headphones) can accommodate all three. Stereo recordings for playback through a pair of speakers will have cross-talk between the two ears, and typical recordings made by simply mixing multiple mono mic sources into a left-right sound field will only be using relative amplitude to provide the stereo image.

For completeness, here's the "Black Hole's Noise" I mentioned above - pure sine tones with octave separations are constantly increasing in pitch but with a frequency envelope so that they fade in at the low frequency end and fade out at high frequencies. This results in a tone that seems to keep rising in pitch indefinitely.

https://dl.dropbox.com/s/9x5c6l3a0ccc4t9/Ipcres.zip
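This effect is commonly known as a Shepard tone (or Shepard-Risset glissando). A minimal sketch of the idea as described above, with every parameter (lowest frequency, number of octaves, sweep time, bell-shaped loudness curve) chosen by me for illustration rather than taken from the linked file:

```python
import math

def shepard(seconds=10.0, rate=44100, f_low=27.5, octaves=8, sweep_s=10.0):
    """Octave-spaced sines gliding upward, faded in at the bottom and out at the top."""
    phases = [0.0] * octaves
    out = []
    for i in range(int(seconds * rate)):
        t = i / rate
        sample = 0.0
        for k in range(octaves):
            # Position of this partial in octaves above f_low; wraps at the top.
            pos = (k + t / sweep_s) % octaves
            freq = f_low * 2.0 ** pos
            # Loudness curve over log-frequency: zero at both ends, so the
            # wrap from the top back to the bottom happens in silence.
            amp = 0.5 * (1.0 - math.cos(2.0 * math.pi * pos / octaves))
            # Accumulate phase so the glide stays click-free.
            phases[k] += 2.0 * math.pi * freq / rate
            sample += amp * math.sin(phases[k])
        out.append(sample / octaves)
    return out
```

Each partial rises by one octave every `sweep_s` seconds. With the defaults the highest partial reaches 7040Hz, safely below half the sample rate. Pure Python is slow for this, so try a short run such as `shepard(seconds=1.0)` first.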
