MymsMan

Ad detector

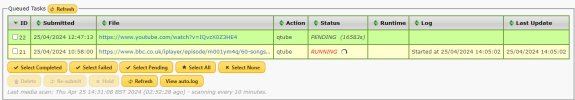

Glasgow local news downloading now.

Packaging error. I'd done it right on my manual update test for all three files, but then forgot to update the build script completely.

Beta package updated. If you could test and confirm...

m3u8 in the supports() methods in youtube_dl/downloader/external.py). However, ISTR that iPlayer is unusually lenient in this regard and if that's still the case then --hls-prefer-native could mean lower system load when fetching BBC shows.

23/02/2024 21:41:46 - Caught error: ERROR: unable to download video data: <urlopen error [Errno 145] Connection timed out>

23/02/2024 22:00:33 - Caught error: ERROR: unable to download video data: <urlopen error [Errno 145] Connection timed out>

Mozilla/5.0 since there is no good use that a server could make of the UA string (now that other ways exist to discover client characteristics).

Perhaps it ought to be. Or, more strongly, probably it ought to be.

There is a default retry count of 10 for things that the code expects to be resolved by retrying. A timeout on connecting is, IIRC, not one of those.
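If the built-in retry count doesn't cover a connect timeout, one option is to retry at the level of whatever calls the download. Below is a minimal Python sketch of that idea, assuming you control a callable that performs the fetch. `fetch_with_retries` and `is_connect_timeout` are hypothetical helpers, not part of youtube-dl. The check uses `errno.ETIMEDOUT` rather than a literal 145 because errno numbers are platform-specific (145 is ETIMEDOUT on MIPS Linux, for example).

```python
import errno
import socket
import time
from urllib.error import URLError


def is_connect_timeout(exc):
    """True if exc looks like a connection timeout.

    urllib wraps socket errors in URLError and keeps the original in .reason.
    Compare against errno.ETIMEDOUT, not a hard-coded number.
    """
    if isinstance(exc, URLError):
        exc = exc.reason
    if isinstance(exc, socket.timeout):
        return True
    return isinstance(exc, OSError) and exc.errno == errno.ETIMEDOUT


def fetch_with_retries(fetch, attempts=5, delay=30.0, sleep=time.sleep):
    """Call fetch() until it succeeds, retrying only on connection timeouts.

    Hypothetical wrapper: any other error, or a timeout on the last
    attempt, is re-raised unchanged.
    """
    for attempt in range(1, attempts + 1):
        try:
            return fetch()
        except OSError as exc:  # URLError is a subclass of OSError
            if attempt == attempts or not is_connect_timeout(exc):
                raise
            sleep(delay)  # back off before the next attempt
```

Only timeouts are retried here. A refused connection or an HTTP error fails straight away, because repeating those usually doesn't help.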

Is this in yt-dl somewhere?

I think all clients should send just Mozilla/5.0

Presumably you mean 1.1.1w as we are already on 1.1.1d?

Or maybe upgrading to OpenSSL 1.0.0w and rebuilding wget would have an effect.
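OpenSSL 1.x marks patch releases with a letter suffix, so versions compare by the numeric part first and the letter second. That makes 1.0.0w older than 1.1.1d, and 1.1.1w newer. Below is a small Python sketch of that comparison, run on version strings like the one `openssl version` prints. `parse_openssl_version` is a hypothetical helper that only understands the `N.N.N` plus optional letters format. The library wget is actually linked against may differ from the one the `openssl` command reports.

```python
import re

# N.N.N followed by an optional letter suffix, e.g. "1.1.1d" or "1.0.0w".
_OPENSSL_VERSION = re.compile(r"(\d+)\.(\d+)\.(\d+)([a-z]*)")


def parse_openssl_version(text):
    """Turn e.g. 'OpenSSL 1.1.1d' into (1, 1, 1, 'd') so tuples compare correctly."""
    match = _OPENSSL_VERSION.search(text)
    if match is None:
        raise ValueError(f"no OpenSSL version found in {text!r}")
    major, minor, patch, letters = match.groups()
    return (int(major), int(minor), int(patch), letters)


if __name__ == "__main__":
    current = parse_openssl_version("OpenSSL 1.1.1d")
    for candidate in ("1.0.0w", "1.1.1w"):
        newer = parse_openssl_version(candidate) > current
        print(f"{candidate}: {'newer' if newer else 'older'} than 1.1.1d")
```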

Actually the --retries ... (there's --fragment-retries ... too) is only used in fetching the media data, although an extractor could implement its own retry mechanism using the same parameter.

--user-agent 'Mozilla/5.0', but I'm sure lots of sites will complain if they're not fed any OS and Gecko data. Maybe not BBC.

Indeed it should, but this seems not to be the factor at play:

You can increase or make infinite the --socket-timeout ... but I'd say the default 600s ought to be plenty.
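The youtube-dl options mentioned in this thread can be collected in one place: `--socket-timeout`, `--retries`, `--fragment-retries`, `--user-agent` and `--hls-prefer-native`. Below is a minimal Python sketch that only assembles the command line. `build_youtube_dl_args` is a hypothetical helper and `EPISODE_URL` is a placeholder. Any option left as `None` is omitted, so youtube-dl keeps its own default: 600s socket timeout per the thread, and a retry count of 10. `"infinite"` is the value youtube-dl's help text accepts for `--retries` and `--fragment-retries`.

```python
import shlex


def build_youtube_dl_args(url, *, socket_timeout=None, retries=None,
                          fragment_retries=None, user_agent=None,
                          hls_prefer_native=False):
    """Assemble a youtube-dl command line from the options discussed above.

    Hypothetical helper: options left as None are not passed, so
    youtube-dl falls back to its own defaults.
    """
    args = ["youtube-dl"]
    if hls_prefer_native:
        args.append("--hls-prefer-native")  # native HLS downloader instead of ffmpeg
    if socket_timeout is not None:
        args += ["--socket-timeout", str(socket_timeout)]  # seconds
    if retries is not None:
        args += ["--retries", str(retries)]  # a number or "infinite"
    if fragment_retries is not None:
        args += ["--fragment-retries", str(fragment_retries)]
    if user_agent is not None:
        args += ["--user-agent", user_agent]
    args.append(url)
    return args


if __name__ == "__main__":
    # "EPISODE_URL" is a placeholder, not a real address.
    cmd = build_youtube_dl_args("EPISODE_URL", retries="infinite",
                                user_agent="Mozilla/5.0", hls_prefer_native=True)
    print(shlex.join(cmd))  # shell-quoted, ready to paste into a terminal
```

As noted above, `--retries` only applies to fetching media data. None of these flags would retry a timeout while connecting; that needs a wrapper around the whole job.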

24/02/2024 18:28:33 - [download] 48.7% of ~2.22GiB at 1.61MiB/s ETA 12:02

24/02/2024 18:28:34 - [download] 48.7% of ~2.22GiB at 1.67MiB/s ETA 11:35

24/02/2024 18:28:34 - [download] 48.7% of ~2.22GiB at 1.67MiB/s ETA 11:35

24/02/2024 18:31:44 - Caught error: ERROR: unable to download video data: <urlopen error [Errno 145] Connection timed out>

I stupidly tried to download a 3-hour+ prog. I downloaded a working MP4, but didn't check whether it's complete. My log is:

One thing that can happen is that the queued command gets stuck at the ffmpeg run to fix the AAC bitstream, which runs out of memory and crashes (or the OS runs out of memory and kills it), causing the queue to restart the command. Picture issues may also accompany this.

Adding a virtual memory paging file of sufficient size (256MB worked for me) using the swapper package allows the command to complete.

But maybe those are not the symptoms you're seeing?
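The failure described here is the ffmpeg AAC fixup step running out of memory, with a 256MB swap file as the cure. It can be guarded against in two ways: check for swap before starting, and run the step with a time limit so a hung ffmpeg gets killed instead of stalling the queue. Below is a minimal Python sketch of both. It assumes a Linux-style `/proc/meminfo`. It also assumes the fixup is an ffmpeg stream copy using the `aac_adtstoasc` bitstream filter, which is what youtube-dl's m3u8 fixup uses; whether this queue runs exactly that command is an assumption. `parse_meminfo`, `has_enough_swap`, `aac_fixup_command` and `run_with_timeout` are hypothetical helpers.

```python
import subprocess
import sys

# Threshold from the post above: a 256MB swap file was enough on that device.
MIN_SWAP_KIB = 256 * 1024


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field name: value in kB}."""
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields and fields[0].isdigit():
            info[name.strip()] = int(fields[0])
    return info


def has_enough_swap(info, minimum_kib=MIN_SWAP_KIB):
    """True if SwapTotal is at least minimum_kib (missing field counts as 0)."""
    return info.get("SwapTotal", 0) >= minimum_kib


def aac_fixup_command(src, dst):
    """Assumed fixup: copy streams into MP4, converting ADTS AAC via aac_adtstoasc."""
    return ["ffmpeg", "-y", "-i", src, "-c", "copy", "-bsf:a", "aac_adtstoasc", dst]


def run_with_timeout(cmd, timeout):
    """Run cmd; return its exit code, or None if it was killed after `timeout` seconds."""
    try:
        return subprocess.run(cmd, timeout=timeout).returncode
    except subprocess.TimeoutExpired:
        # subprocess.run has already killed and reaped the child by now.
        return None


if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as f:
            info = parse_meminfo(f.read())
    except OSError:
        info = {}
    if not has_enough_swap(info):
        print("warning: less than 256MB of swap; the ffmpeg fixup may run out of memory",
              file=sys.stderr)
```

A job that returns `None` from `run_with_timeout` fails cleanly, much like killing ffmpeg by hand. It does not loop in the queue.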

kill it (pkill ffmpeg). That will cause the job to fail.

5000 [mp4 @ 0x4c73f0] Invalid DTS: 118449000 PTS: 118447200 in output stream 0:0, replacing by guess

4999 [hls,applehttp @ 0x45a100] Invalid timestamps stream=1, pts=119347200, dts=119349000, size=15488

4998 [mpegts @ 0x499c00] Invalid timestamps stream=1, pts=119349000, dts=119350800, size=15601

4997 [mp4 @ 0x4c73f0] Invalid DTS: 118447200 PTS: 118445400 in output stream 0:0, replacing by guess

4996 [hls,applehttp @ 0x45a100] Invalid timestamps stream=1, pts=119345400, dts=119347200, size=18258

4995 [mpegts @ 0x499c00] Invalid timestamps stream=1, pts=119347200, dts=119349000, size=15488

4994 [mp4 @ 0x4c73f0] Invalid DTS: 118445400 PTS: 118443600 in output stream 0:0, replacing by guess

4993 [hls,applehttp @ 0x45a100] Invalid timestamps stream=1, pts=119343600, dts=119345400, size=18230

4992 [mpegts @ 0x499c00] Invalid timestamps stream=1, pts=119345400, dts=119347200, size=18258

The download did complete: 2GB, plus 120MB of log messages.

"Yeah, it does that."
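When most of a 120MB log is the same few ffmpeg timestamp warnings repeated, a quick way to see what's actually in it is to collapse lines that differ only in their numbers. Below is a Python sketch of that; `summarize_log` is a hypothetical helper. It replaces every standalone decimal or `0x` hex number with `#`. That is a heuristic, not a parser for ffmpeg's log format. ffmpeg's own `-loglevel` option may also cut the volume, depending on the level these particular messages are logged at.

```python
import re
import sys
from collections import Counter

# Standalone numbers (decimal or 0x-prefixed hex); digits inside words like "mp4" are kept.
_NUMBER = re.compile(r"\b(?:0x[0-9a-fA-F]+|\d+)\b")


def summarize_log(lines):
    """Count non-blank log lines by shape, with standalone numbers replaced by '#'."""
    return Counter(_NUMBER.sub("#", line.strip()) for line in lines if line.strip())


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python summarize_log.py download.log
    with open(sys.argv[1], errors="replace") as log:
        for shape, count in summarize_log(log).most_common(10):
            print(f"{count:8d}  {shape}")
```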